Under the hood: The hardware behind Asus' H200 GPU server

Enough GPUs to run the 671B version of DeepSeek-R1.

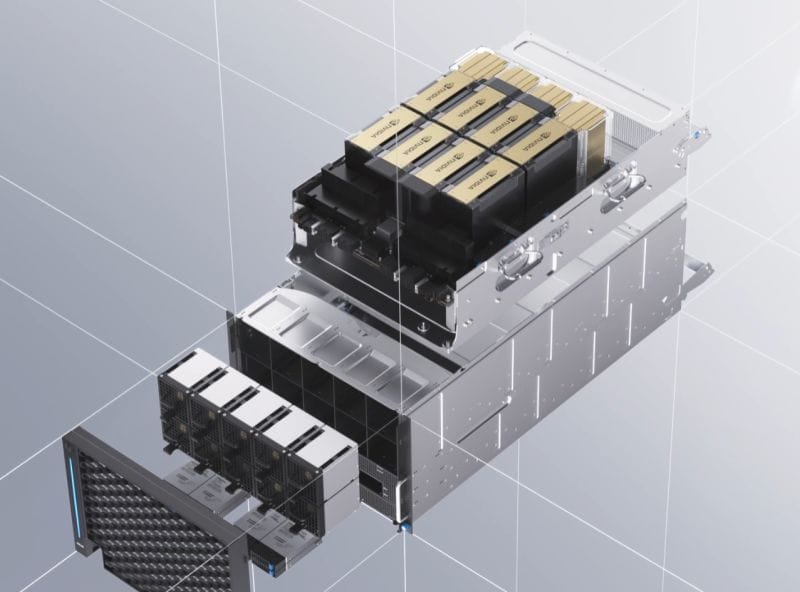

This Asus GPU server measures just 7U but packs enough GPU oomph to run the largest DeepSeek-R1 "reasoning" AI model locally*.

I've gone with Asus motherboards for the last few PCs I built. But when I visited their office last week, I looked at something far more powerful than a PC motherboard.

I got a close-up of one of the latest Asus GPU servers crammed with powerful Nvidia H200 GPUs.

*The 671B model needs 5x H200 at 4-bit quantisation and 10x H200 at FP16, so this 8-GPU server covers the 4-bit version but not FP16.
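The footnote's figures can be sanity-checked with back-of-envelope arithmetic. The sketch below is a rough, weights-only estimate under assumptions not stated in the article: 141 GB of HBM3e per H200, 2 bytes per parameter at FP16 and 0.5 bytes at 4-bit. It ignores the KV cache, activations, quantisation metadata and framework overhead, so it gives a lower bound rather than a deployment figure.

```python
import math

# Assumptions (not from the article): 141 GB of HBM3e per H200, weights only.
# KV cache, activations and framework overhead are ignored, so these are lower bounds.
H200_MEMORY_GB = 141
GPUS_IN_SERVER = 8
PARAMS = 671e9  # DeepSeek-R1 parameter count

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"FP16": 2.0, "4-bit": 0.5}


def weights_gb(precision: str) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return PARAMS * BYTES_PER_PARAM[precision] / 1e9


def min_gpus(precision: str) -> int:
    """Smallest number of H200s whose combined memory can hold the weights."""
    return math.ceil(weights_gb(precision) / H200_MEMORY_GB)


def fits_in_server(precision: str) -> bool:
    """True if the weights alone fit in this 8-GPU server's combined memory."""
    return weights_gb(precision) <= GPUS_IN_SERVER * H200_MEMORY_GB


if __name__ == "__main__":
    for p in BYTES_PER_PARAM:
        print(f"{p}: ~{weights_gb(p):,.1f} GB of weights, "
              f">= {min_gpus(p)} x H200, fits in 8-GPU server: {fits_in_server(p)}")
```

Under these assumptions the FP16 weights (about 1,342 GB) need 10 GPUs, in line with the footnote, and exceed the 1,128 GB spread across this server's eight H200s. The 4-bit weights (about 336 GB) fit on 3 GPUs on paper; a figure like the footnote's 5x presumably leaves headroom for the KV cache and runtime overhead.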

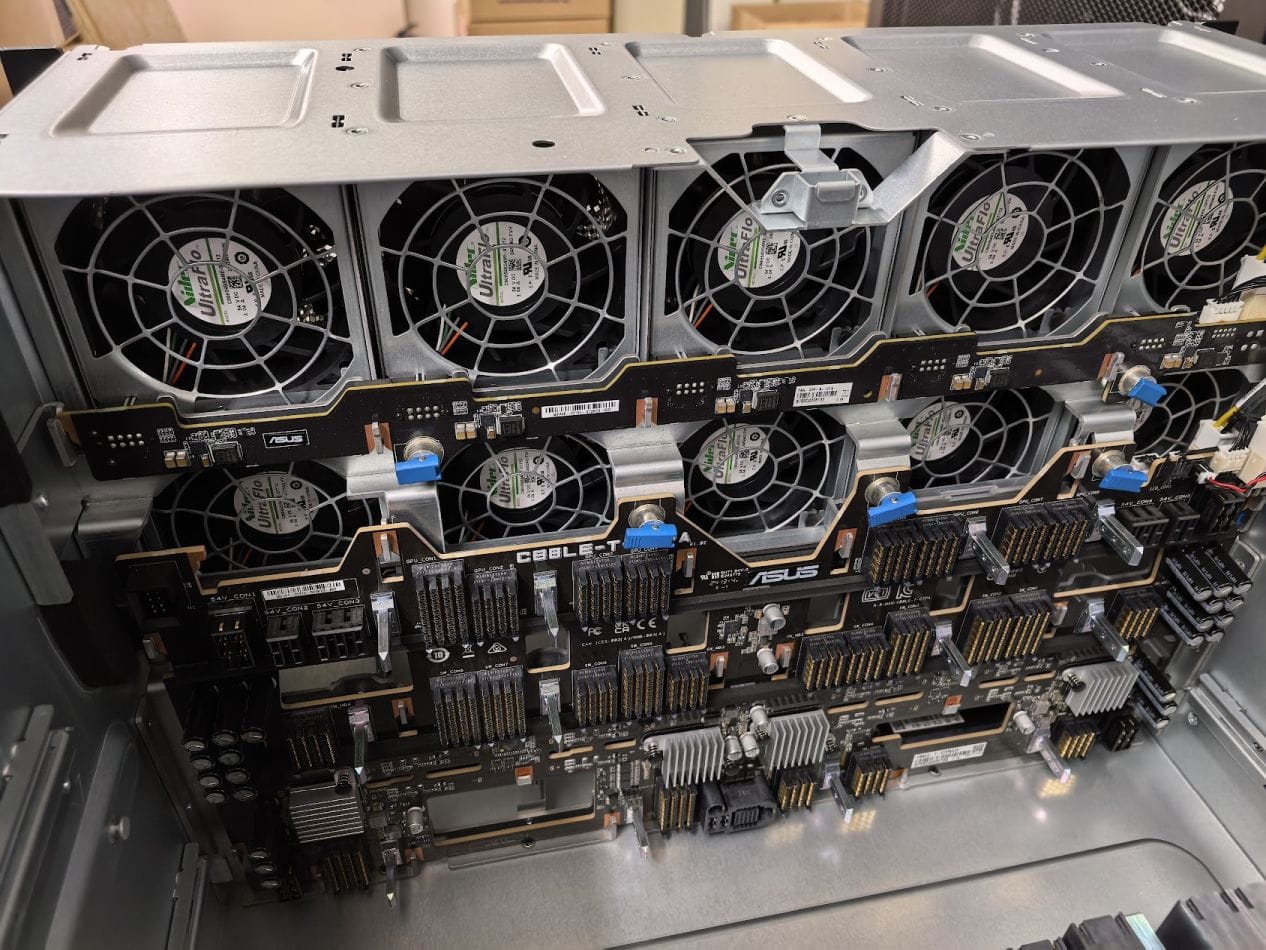

Photo Captions: (Left) Side view. (Middle) 10x fan units at the front. (Right) Zooming in on a dual-fan unit.

Asus GPU server

The particular model I saw (ESC N8-E11V) is air-cooled, but I was told Asus also offers variants that support direct-to-chip liquid cooling.

Specifications:

- Dual Intel Xeon.

- 8x H200 GPUs (SXM).

- 32 DIMM slots, 10 NVMe.

- 7U, standard 19-inch rack.

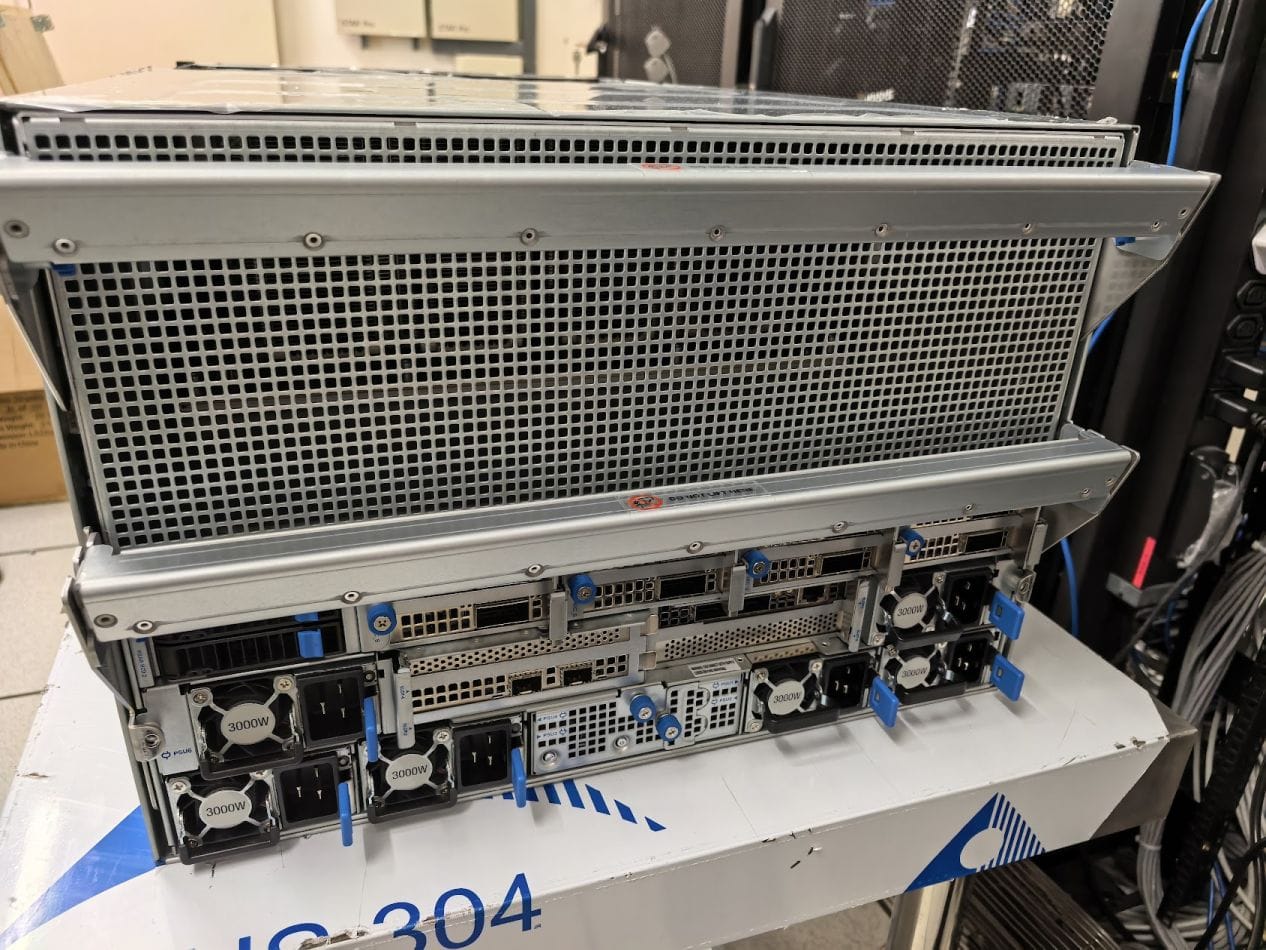

There are six 3,000W PSUs. By default, four are active and the remaining two serve as hot spares. If desired, the split between active and spare PSUs can be configured differently.
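In a redundancy scheme like the default 4+2 split, the usable power budget comes from the active units while the spares cover failures. A minimal sketch of that arithmetic, assuming every PSU delivers its full 3,000W rating and shares load evenly (real supplies may be derated, for example at lower input voltages):

```python
# Assumptions (not from the article): each active PSU delivers its full 3,000 W
# rating and load is shared evenly; derating for input voltage or temperature is ignored.
PSU_WATTS = 3_000
TOTAL_PSUS = 6


def redundancy(active: int) -> tuple[int, int]:
    """Return (usable watts, spare PSUs) for a given number of active PSUs."""
    if not 1 <= active <= TOTAL_PSUS:
        raise ValueError(f"active must be between 1 and {TOTAL_PSUS}")
    spares = TOTAL_PSUS - active
    return active * PSU_WATTS, spares


if __name__ == "__main__":
    # 4+2 is the default described above; 5+1 is a hypothetical alternative split.
    for active in (4, 5):
        watts, spares = redundancy(active)
        print(f"{active}+{spares}: {watts:,} W usable; "
              f"up to {spares} PSU failure(s) without dropping below {watts:,} W")
```

With the default split, the four active units provide 12,000W and up to two PSUs can fail without reducing that budget. The 5+1 split is only an illustration; the article doesn't say which alternative mixes Asus supports.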

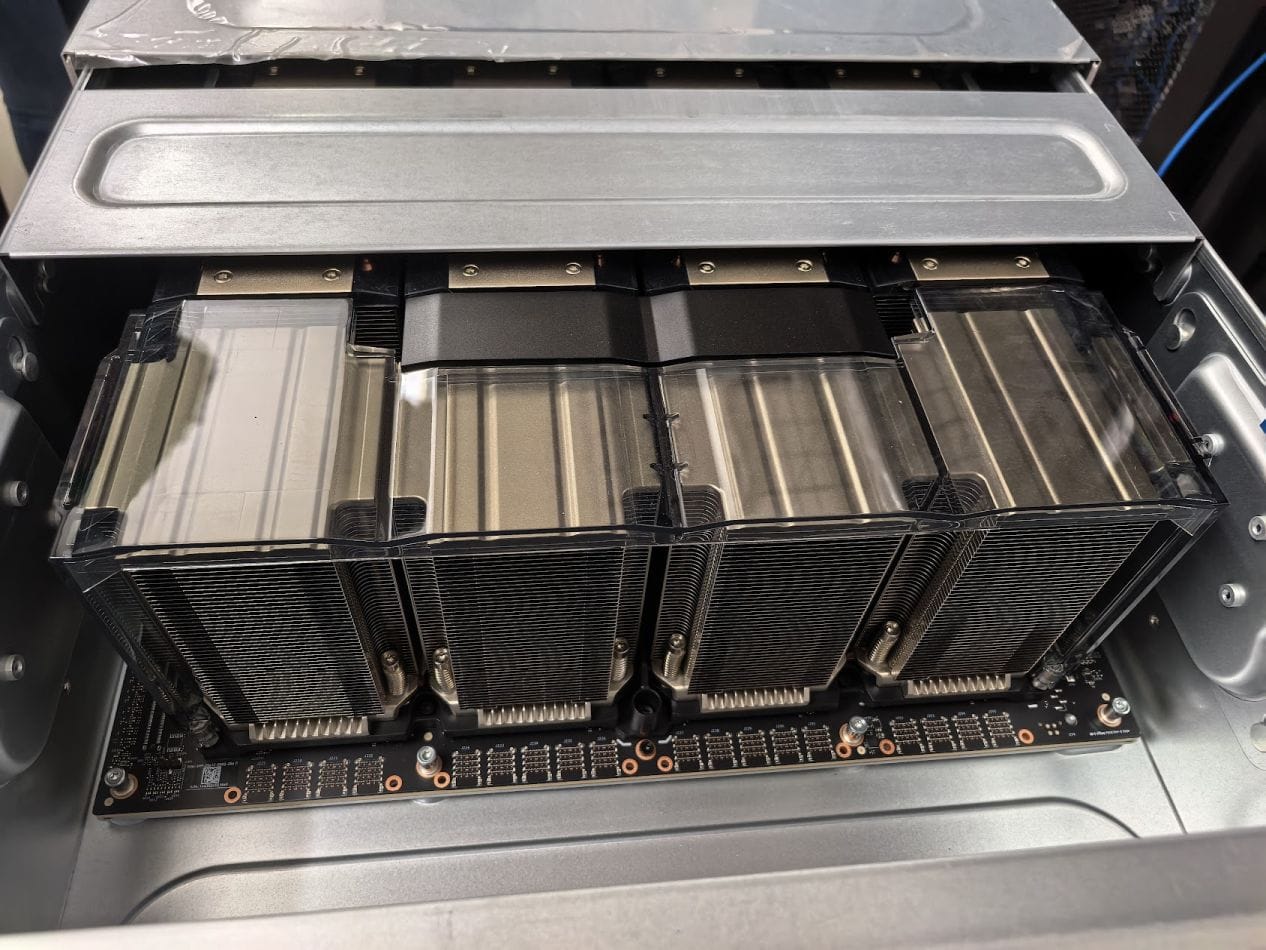

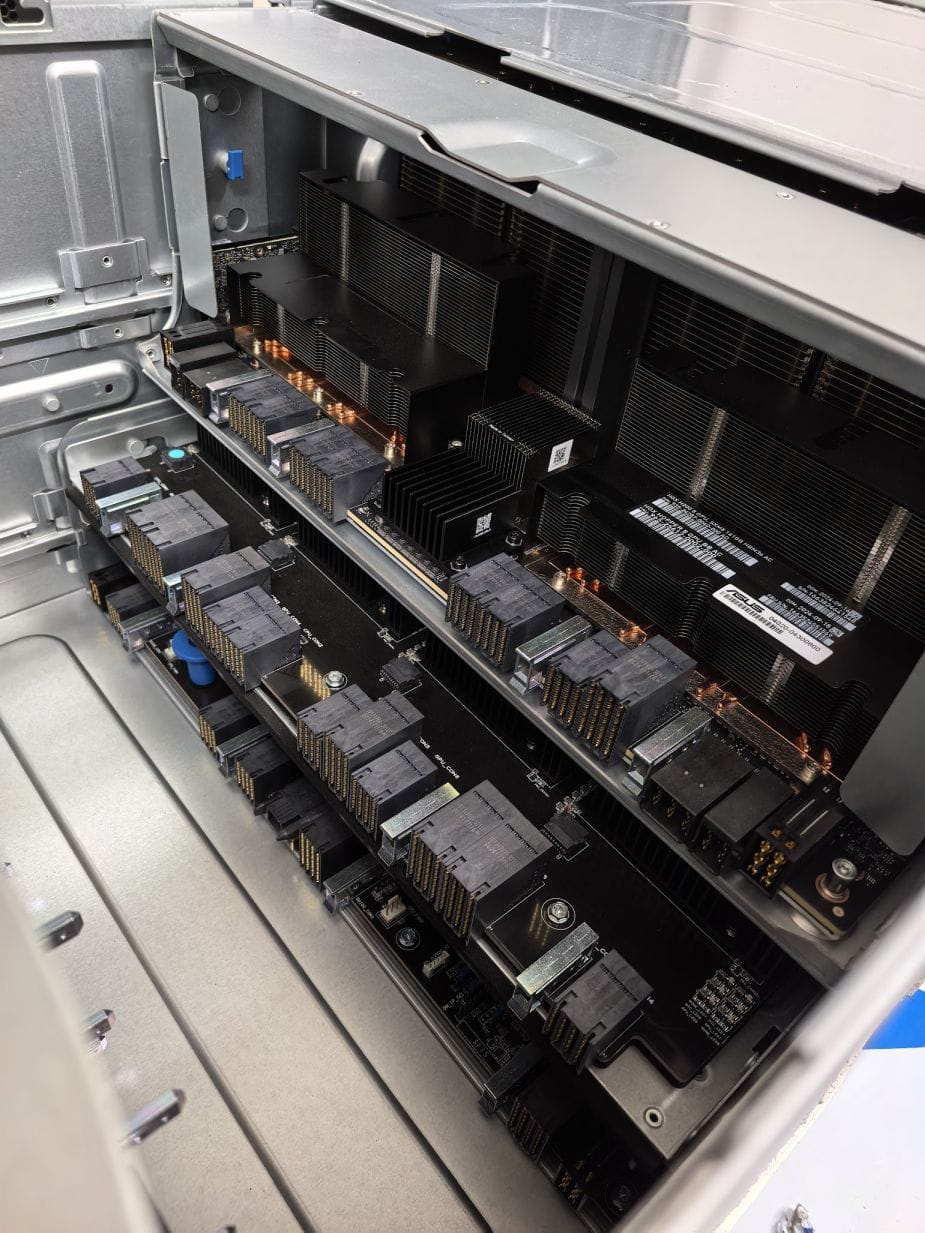

Photo Captions: (Left) Massive heat sinks on H200 GPUs. (Middle) The midplane board behind the front fans. (Right) 3 layers: GPU board, PCIe switch, motherboard.

What caught my attention

Here are two things that caught my attention.

a) Clean, modular design

b) Standard chassis dimensions

- Clean design:

The clean design minimises cabling and clutter. Key sections are connected by a midplane board (Photo 6) that serves as a riser.

The midplane links three sections: the server board at the bottom, a PCIe switch in the middle, and the GPU board at the top.

GPUs and network components can be removed from the back for servicing or replacement. The main high-speed fans can also be hot-swapped from the front.

- Chassis dimensions:

The GPU server uses a standard 19-inch chassis with a manageable depth of 885mm (around 0.9m), so it fits into most standard server racks.
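Whether the chassis fits a given rack comes down to two numbers: height in rack units and depth. A sketch, assuming the standard EIA-310 rack unit of 44.45mm and a hypothetical usable mounting depth for the rack; it checks height and depth only and ignores power, cooling and cable clearance, which may limit density well before rack space does.

```python
# 1U = 1.75 in = 44.45 mm (EIA-310 rack standard).
RACK_UNIT_MM = 44.45
SERVER_U = 7
SERVER_DEPTH_MM = 885  # chassis depth quoted in the article


def height_mm(units: int) -> float:
    """Physical height of a chassis that occupies `units` rack units."""
    return units * RACK_UNIT_MM


def servers_per_rack(rack_u: int) -> int:
    """How many 7U servers stack into a rack of `rack_u` units (height only)."""
    return rack_u // SERVER_U


def depth_fits(usable_depth_mm: int) -> bool:
    """Hypothetical check: does the chassis fit a rack's usable mounting depth?"""
    return usable_depth_mm >= SERVER_DEPTH_MM


if __name__ == "__main__":
    print(f"7U chassis height: {height_mm(SERVER_U):.2f} mm")
    print(f"Servers per 42U rack (height only): {servers_per_rack(42)}")
    for depth in (800, 1000):  # example usable depths, not from the article
        print(f"Usable depth {depth} mm -> fits: {depth_fits(depth)}")
```

A 7U chassis is roughly 311mm tall, so a 42U rack has room for six on height alone; whether a given rack can power and cool that many is a separate question.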

Photo Captions: (Left) Back view. Note the PSUs and networking ports. (Right) Each layer can be pulled out for servicing.

Maintenance

Finally, I asked about repairs, as I've read elsewhere that Nvidia GPUs occasionally fail. As with any enterprise offering, SLA details will vary.

Interestingly, there is an option for self-repair, with spare GPUs kept at the data centre. Because the chassis is easy to open up, downtime can be kept to a minimum.

Thank you to Morris Tan of Asus for sharing more about GPU servers!