The two upgrades behind GPT-4o's stunning visuals

Is this the "ChatGPT moment" for cartoon artists and graphic designers?

OpenAI has just launched a new image-generation tool, built into its GPT-4o model, and it might be the "ChatGPT moment" for cartoon artists and graphic designers. It is very, very good.

Key improvements

How is it better? There are two key improvements:

- Better text rendering.

- Ability to follow complex prompts.

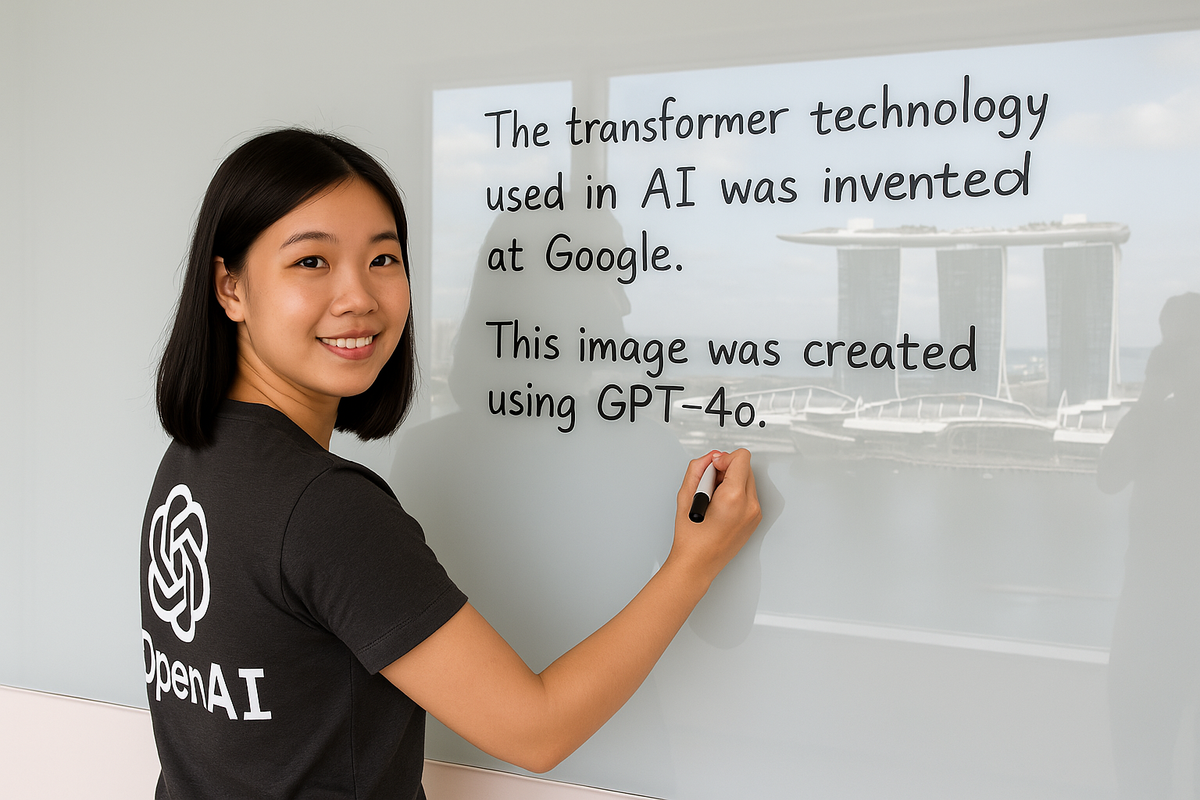

Many AI models struggle to render text correctly in images. The new version of GPT-4o produces text far more consistently. It's still not perfect, but it is good enough to be used in more scenarios.

More importantly, the new model handles complex prompts with greater accuracy, and it generally follows additional instructions as well.

Under the hood

Apart from following detailed prompts closely, GPT-4o can handle more items within an image: as many as 20 different objects.

As explained by OpenAI, the new model offers tighter "binding" of objects to their traits and relations for better control.

This means mirrors show proper reflections and objects don't just float in midair. And yes, designs get rendered correctly on clothes and objects.

Impact of GPT-4o image generation

What is the impact of GPT-4o image generation? It is still early days, but the brunt will probably be felt by marketing agencies, illustrators, and media production teams.

For instance, the ability to produce text and follow prompts consistently means marketers could potentially create simpler images without engaging an external agency.

Finally, because it is now easy to tack logos onto images that are far more realistic than before, I suspect brands are in for a massive headache.

PS: The above photo is edited from the one below, which was shared in OpenAI's blog post announcing GPT-4o image generation.