AI battle heats up with new OpenAI models, Huawei supercomputer

Huawei CloudMatrix 384 beats Nvidia's GB200 NVL72 on raw performance.

AI battle heats up as OpenAI releases the eerily good o3 and Huawei unveils its CloudMatrix 384 AI supercomputer, which beats Nvidia's latest GB200 NVL72.

Age of AI reasoning

Another week, another AI model. Earlier today, OpenAI released its full-fledged o3 and o4-mini reasoning models, which are designed for deep reasoning, including over images.

I tested their visual capabilities by sending them mid-resolution photos I had snapped recently and asking them to guess where each was taken.

The outcome was astounding.

- Photo 1: Apidays at MBS

I used o3, and it concluded that the photo was taken at MBS by matching the venue's distinctive carpet against photos posted on social media.

From the word "API" on the booth backdrops, it ran further searches and correctly identified the event as Apidays at MBS this week.

Time: 36 secs.

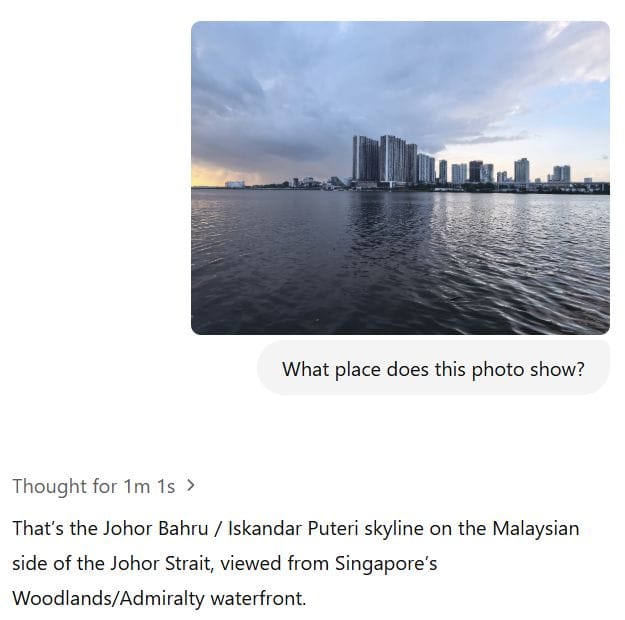

- Photo 2: Woodlands Jetty

I used o4-mini-high for this photo, which I took during a recent video interview with CNA about whether Singapore is losing the data centre race.

It examined various parts of the photo, running through multiple possibilities and discarding them. Once it settled on Johor Bahru as the likely area, it ran online searches and concluded that I was at the Woodlands/Admiralty waterfront.

And yes, it also told me the task was tricky.

Time: 1 min 1 sec.

Correct guesses aside, what got my attention was the methodical, step-by-step reasoning and the online searches, all done at machine speed.

Photo Credit: Paul Mah. Right: A 2019 photo of the original Ascend 910 I took at Huawei's tech conference in China.

Huawei's CloudMatrix

Next up is Huawei's CloudMatrix, an engineering marvel that compensates for the relative weakness of its Ascend 910C AI chip by essentially building a single scale-up supercomputer that spans 16 racks.

This is genuinely hard to pull off: latency tolerances are unforgiving, and it took a lot more than elbow grease. Innovation was required at multiple levels:

- Networking.

- Software.

- Optics.

According to SemiAnalysis, the system is connected by 400G fibre-optic transceivers. For comparison, most home networks run at 1G; mine is 10G. A 400G port carries 400 Gbps, and Huawei's system has 6,912 of these ports.
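To put those port numbers in perspective, here is the back-of-the-envelope arithmetic. It is a sketch that assumes every one of the 6,912 ports runs at its full 400 Gbps line rate at the same time; the aggregate is a raw sum across ports, not a measure of usable or bisection bandwidth.

```python
# Back-of-the-envelope arithmetic for CloudMatrix 384's optical ports.
# Assumption: all 6,912 ports run at the full 400 Gbps line rate simultaneously.
PORTS = 6_912
PORT_GBPS = 400        # one 400G port = 400 Gbps
HOME_1G_GBPS = 1       # typical home network link
HOME_10G_GBPS = 10     # the author's home network


def aggregate_gbps(ports: int, gbps_per_port: int) -> int:
    """Raw line rate summed across all ports, in Gbps."""
    return ports * gbps_per_port


total_gbps = aggregate_gbps(PORTS, PORT_GBPS)
total_pbps = total_gbps / 1_000_000  # 1 Pbps = 1,000,000 Gbps

print(f"One port vs a 1G home link: {PORT_GBPS // HOME_1G_GBPS}x")
print(f"One port vs a 10G home link: {PORT_GBPS // HOME_10G_GBPS}x")
print(f"Aggregate: {total_gbps:,} Gbps (~{total_pbps:.2f} Pbps)")
```

Running it prints 400x, 40x, and an aggregate of 2,764,800 Gbps (about 2.76 Pbps).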

- All-to-all topology.

- 384 Ascend 910C chips (about 5x the NVL72's 72 GPUs).

- 300 PFLOPS (Nvidia's NVL72 is 180 PFLOPS)*.

- 1,229 TB/s HBM bandwidth (NVL72 is 576 TB/s).

*Dense BF16 compute
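Dividing the headline numbers out shows both halves of the story: the system wins on aggregate, but each Ascend 910C delivers only about a third of an NVL72 GPU's dense BF16 compute. The sketch below uses only the figures quoted above and assumes the NVL72 counts as 72 GPUs.

```python
# Ratios and per-chip figures derived from the spec bullets.
# Assumption: NVL72 = 72 GPUs; compute figures are dense BF16 as quoted.
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    chips: int
    dense_bf16_pflops: float
    hbm_tbps: float  # aggregate HBM bandwidth, TB/s

    def pflops_per_chip(self) -> float:
        return self.dense_bf16_pflops / self.chips

    def hbm_tbps_per_chip(self) -> float:
        return self.hbm_tbps / self.chips


cloudmatrix = System("CloudMatrix 384", 384, 300.0, 1229.0)
nvl72 = System("GB200 NVL72", 72, 180.0, 576.0)


def ratio(a: float, b: float) -> float:
    return a / b


# System-level advantage of CloudMatrix 384 over NVL72.
for label, a, b in [
    ("chips", cloudmatrix.chips, nvl72.chips),
    ("dense BF16 compute", cloudmatrix.dense_bf16_pflops, nvl72.dense_bf16_pflops),
    ("HBM bandwidth", cloudmatrix.hbm_tbps, nvl72.hbm_tbps),
]:
    print(f"{label}: {ratio(a, b):.2f}x")

# Per-chip view, where the Ascend 910C's relative weakness shows up.
for s in (cloudmatrix, nvl72):
    print(f"{s.name}: {s.pflops_per_chip():.2f} PFLOPS/chip, "
          f"{s.hbm_tbps_per_chip():.1f} TB/s HBM/chip")
```

This prints ratios of 5.33x, 1.67x and 2.13x, then 0.78 vs 2.50 PFLOPS per chip and 3.2 vs 8.0 TB/s of HBM bandwidth per chip.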

There are drawbacks, of course.

The system draws 3.9x the power of the NVL72 for roughly 1.7x the compute, so it is markedly less power-efficient. Then again, China is short of powerful GPUs, not data centre space or power.
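How much less efficient? A quick sketch, assuming "3.9x the power" compares whole-system power draw and using the dense BF16 figures of 300 vs 180 PFLOPS: divide the power ratio by the compute ratio to get energy per unit of compute.

```python
# Relative energy efficiency from the quoted figures.
# Assumption: 3.9x is whole-system power vs NVL72; compute is dense BF16.
POWER_RATIO = 3.9                 # CloudMatrix 384 power / NVL72 power
COMPUTE_RATIO = 300.0 / 180.0     # CloudMatrix 384 PFLOPS / NVL72 PFLOPS


def power_per_flop_ratio(power_ratio: float, compute_ratio: float) -> float:
    """How many times more power per unit of compute the first system needs."""
    return power_ratio / compute_ratio


w_per_flop = power_per_flop_ratio(POWER_RATIO, COMPUTE_RATIO)
flops_per_watt = 1 / w_per_flop  # relative perf-per-watt vs NVL72

print(f"Power per FLOP: {w_per_flop:.2f}x the NVL72")
print(f"Perf per watt: {flops_per_watt:.2f}x the NVL72")
```

On these numbers, CloudMatrix 384 needs about 2.34x the power per FLOP, or roughly 0.43x the NVL72's performance per watt.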

What do you think of it?